May 15, 2024

Artificial intelligence (AI) has ushered in a brave new world in which creative and computational processes that once took days, weeks, or months can now be accomplished in minutes, often as well as or better than most humans could manage. Unfortunately, that includes identity theft: AI is adept at forging documents, digitally cloning voices and faces, and hacking systems.

Below are three forms of AI-powered identity theft that consumers, cybersecurity experts, law enforcement agencies, and companies are scrambling to counter. While the methods used to commit identity theft may have changed, the same laws that protect victims of old-fashioned identity theft continue to apply. The lawyers at Schlanger Law Group continue to advocate on behalf of victims, whether the unauthorized charges at issue stem from a wallet stolen off a countertop or from more cutting-edge technology.

Using AI, a scammer can take a few seconds of someone's voice from a social media platform such as YouTube or TikTok and run it through AI-powered software to clone that person's voice. The scammer can then type whatever they want, and the software will speak the typed words in the cloned voice. This sophisticated software also reproduces realistic human traits such as enunciation and emotional tone. Scammers then target businesses or consumers by posing as trusted people, tricking unsuspecting victims into revealing personal information such as passwords, or even into transferring money to the scammers' bank accounts.

Wall Street Journal tech reporter Joanna Stern recently used voice cloning to test whether it could fool Chase Bank's biometric voiceprint security. When Stern called Chase customer service, the automated voiceprint system asked her to say her name. Stern played a clip of her cloned voice saying her name; the clone passed the security check, and she was connected to a live Chase agent.

One way to protect yourself from voice cloning scams is to ask your financial institution to add a password that the account holder must recite when conducting transactions over the phone. Another is to use a separate, standalone security token (e.g., a security fob) for important accounts. Many banks are happy to issue such tokens, particularly to account holders with significant balances. Because a standalone token is not tethered to the account holder's phone or Wi-Fi connection, it can be an important security upgrade.

Another widely reported voice cloning scam is the case of Jennifer DeStefano, covered by CNN in April 2023. DeStefano received a call on her cell phone from someone who sounded like her daughter Brie, claiming she had been kidnapped and that the kidnappers were demanding money for her life. Jennifer was terrified and convinced that it was Brie on the other end of the line. As events unfolded, other family members were alerted, and it became apparent that Brie was actually safe in her bed and that Jennifer was being targeted by a scammer using voice cloning. Experts recommend that families establish a 'family password' to be said over the phone whenever there is doubt that the person on the other end of the line is really a family member.

If you believe you are a victim of identity theft, read our guide to the steps you can take to protect yourself here.

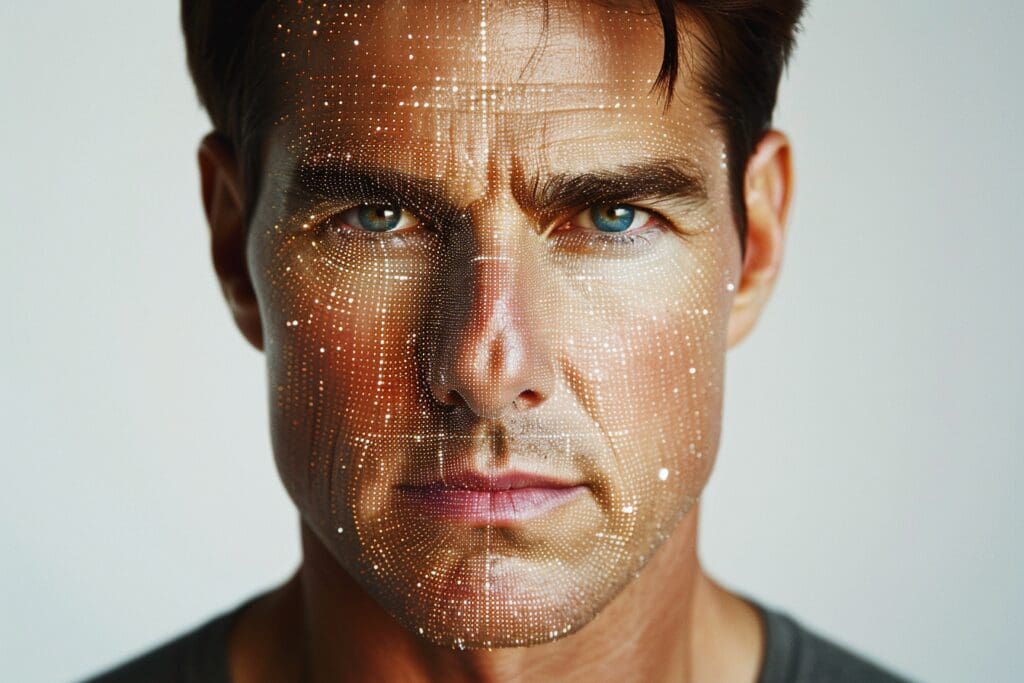

Deepfakes often combine voice cloning with AI-powered face swapping. One of the most famous deepfakes was created by Chris Ume, a visual effects artist who used AI to digitally map superstar Tom Cruise's face onto a model, creating a viral TikTok sensation. Other deepfakes skip face swapping altogether and instead consist of photos or videos generated entirely by AI, known as synthetic media. When criminals use these types of deepfakes to commit identity theft, it is known as 'identity hijacking'.

In February 2024, the AFP reported that a Hong Kong finance worker was scammed into wiring $26M to cybercriminals who used identity hijacking and impersonated senior executives on a video call. The only real person on the call was the finance worker. Everyone else was a deepfake.

The Federal Reserve defines synthetic identity theft as “a crime in which perpetrators combine fictitious and sometimes real information, such as SSNs and names, to create new identities to defraud financial institutions, government agencies or individuals”.

Fraudsters might start with a real Social Security number, often one belonging to someone less likely to monitor their credit, such as a child or an elderly person, and pair it with fabricated details such as AI-generated driver's licenses or other identifying documents. This blend of real and false information is then used to apply for credit. Over time, the fraudster builds a credit profile, then maxes out all available credit and disappears.

The synthetic identity thief leaves behind substantial financial losses. The elusive nature of synthetic identity theft makes it one of the most challenging types to detect and combat. For victims, particularly those whose personal information was used without their knowledge, the consequences can surface years later, complicating recovery and resolution. As digital access to personal data increases, so does the prevalence of this type of fraud, highlighting the critical need for vigilance and advanced protective measures by individuals and financial institutions alike.

According to a forecast by the Deloitte Center for Financial Services, losses from synthetic identity fraud are expected to reach $23 billion by the year 2030.

One relatively simple measure individuals can take is to regularly check their credit reports for new accounts, new credit inquiries, and unexpected changes in balances. Any errors on a report should be disputed promptly and in writing. For pointers on how to properly dispute inaccurate credit reporting, click here.

If the dispute is not resolved favorably, the consumer should consider contacting an attorney who regularly litigates Fair Credit Reporting Act cases on behalf of victims. Representing victims of inaccurate credit reports is one of Schlanger Law Group's core practice areas.

Although these are new forms of identity theft, they still fall under the umbrella of two powerful consumer protection laws: the Electronic Fund Transfer Act (EFTA) and the Fair Credit Reporting Act (FCRA). These laws provide crucial safeguards to protect consumers and help them manage the repercussions of identity theft.

Under the EFTA, consumers are protected against unauthorized electronic fund transfers, which is increasingly pertinent in cases like voice cloning scams or synthetic identity theft, where perpetrators may attempt to initiate unauthorized transactions. The Act offers the strongest level of protection to consumers who report unauthorized transfers to their financial institution within two business days of learning of the problem and within 60 days of the date the institution transmits a monthly statement containing the charge. Where the consumer waits more than two business days to inform the bank but still reports within the 60-day window, the consumer's liability is capped at $500. Where the consumer waits longer than 60 days, more complicated and less protective rules apply. To read more about the rules governing liability for unauthorized transfers, click HERE.

The FCRA emphasizes the accuracy and privacy of consumer information in credit reporting. It grants consumers the right to access their credit reports and to dispute any inaccuracies, which can be vital when dealing with synthetic identity theft or any fraud that misuses their credit information. When a consumer disputes inaccurate information, the credit reporting agency must conduct a reasonable investigation, which includes verifying the information with the entity that furnished it. The FCRA creates a private right of action, allowing consumers to sue and recover damages from credit reporting agencies that fail to reasonably investigate their disputes. Similarly, a consumer can sue a data furnisher that falsely verifies account information during the credit reporting agency's investigation. The FCRA also requires credit reporting agencies to follow reasonable procedures to prevent the reporting of inaccurate information.

Together, the EFTA and FCRA create a powerful framework that empowers consumers to defend against and rectify the damage caused by AI-powered identity theft. By ensuring that financial institutions and credit reporting agencies adhere to stringent standards for reimbursing identity theft losses and handling consumer information and disputes, these laws not only help to prevent fraud but also support consumers in recovering from any incidents that do occur. As AI technology continues to evolve, the enforcement of these laws will be essential in safeguarding consumers against increasingly sophisticated threats.

Written by: Schlanger Law Group

Reach out to Schlanger Law Group for a free consultation, and let’s discuss your case with no upfront fees.

The information on this website is for general information purposes only. Nothing on this site should be taken as legal advice for any individual case or situation.

This information is not intended to create, and receipt or viewing does not constitute, an attorney-client relationship.

ATTORNEY ADVERTISEMENT | Past Results Do Not Guarantee Similar Outcomes in the Future
